Possible bug in wetdry.F for nested apps

Possible bug in wetdry.F for nested apps

Hi,

I read wetdry.F, and was wondering if the index boundaries should account for the contact regions in nested applications? Currently, there are ranges like "JstrR,JendR" etc., but shouldn't they be "JstrT,JendT" instead?

Thanks!

Regards, Stefan

I read wetdry.F, and was wondering if the index boundaries should account for the contact regions in nested applications? Currently, there are ranges like "JstrR,JendR" etc., but shouldn't they be "JstrT,JendT" instead?

Thanks!

Regards, Stefan

- arango

- Site Admin

- Posts: 1383

- Joined: Wed Feb 26, 2003 4:41 pm

- Location: DMCS, Rutgers University

- Contact:

Re: Possible bug in wetdry.F for nested apps

Hmm. I will have to think about it but maybe it will be needed. It will need to be tested. There are a set of those indices for RHO-, PSI-, U-, and V-points. It will be only relevant it coastlines are present in the contact points.

Re: Possible bug in wetdry.F for nested apps

we made some updates in those exchanges as well, but it had to deal with the masking that was used. Either Dave or I will send you the changes in a bit. we have some applications that we are working on first to test this out.

-j

-j

Re: Possible bug in wetdry.F for nested apps

Thanks for your replies!

I work on a 2d application, and another potentially relevant thing I just noticed is that the nesting boundary conditions for the refined grid use fields called e.g.

I think this is the case even in 2d applications. However, I think that this field is never calculated in nesting.F for 2d applications. Could this be true? I only checked for the Rutgers code, maybe you have already changed this in COAWST?

I haven't tested it, but it could explain why I always have zero influx of u/vbar at my refined (open) boundaries. Looking into this was actually the reason why I stumbled across the wet-mask issue. I printed out GRID(ng)%umask_wet(i,j) in subroutine put_refine2d(), and it was always zero. After modifying the index ranges in wetdry.F, the umask_wet was "2" for wet-wet neighbours, as it's supposed to be (disregarding the fact that in that subroutine, there should perhaps only appear binary masks, but that's a different problem and I think I can work around this....). However, when I checked the history file, it *still* was zero flux at the boundary. The only trace I found so far is the boundary condition REFINED(cr)%DU_avg2(1,m,tnew).

Let me know if this makes any sense.

Regards, Stefan

I work on a 2d application, and another potentially relevant thing I just noticed is that the nesting boundary conditions for the refined grid use fields called e.g.

Code: Select all

REFINED(cr)%DU_avg2(1,m,tnew)

I haven't tested it, but it could explain why I always have zero influx of u/vbar at my refined (open) boundaries. Looking into this was actually the reason why I stumbled across the wet-mask issue. I printed out GRID(ng)%umask_wet(i,j) in subroutine put_refine2d(), and it was always zero. After modifying the index ranges in wetdry.F, the umask_wet was "2" for wet-wet neighbours, as it's supposed to be (disregarding the fact that in that subroutine, there should perhaps only appear binary masks, but that's a different problem and I think I can work around this....). However, when I checked the history file, it *still* was zero flux at the boundary. The only trace I found so far is the boundary condition REFINED(cr)%DU_avg2(1,m,tnew).

Let me know if this makes any sense.

Regards, Stefan

- arango

- Site Admin

- Posts: 1383

- Joined: Wed Feb 26, 2003 4:41 pm

- Location: DMCS, Rutgers University

- Contact:

Re: Possible bug in wetdry.F for nested apps

Notice that the indices told and tnew are local (dummy) time indices:

However, if you are running a 2D shallow-water application (SOLVE3D is off), why are you dealing with state variables (DU_avg1, DU_avg2, DV_avg1, DV_avg2, Zt_avg1) that are only need in 3D applications? Something is very wrong here. I have never run nesting in a 2D application. If this is the case, some SOLVE3D conditionals are missing in nesting.F.

Code: Select all

!

! Set time snapshot indices for the donor grid data.

!

told=3-RollingIndex(cr)

tnew=RollingIndex(cr)Re: Possible bug in wetdry.F for nested apps

I see, thanks. That DU_Avg field is actually appearing in u2dbc_im.F, for the "nested" type boundary conditions (in a 2D application). I substituted it there by a dummy value to test, and then the dummy value appears in my history file. Unfortunately I can only continue testing on Monday. Anyways, thanks for looking into it!

- arango

- Site Admin

- Posts: 1383

- Joined: Wed Feb 26, 2003 4:41 pm

- Location: DMCS, Rutgers University

- Contact:

Re: Possible bug in wetdry.F for nested apps

Well, u2dbc_im.F and v2dbc_im.F need to be modified to include code relevant to 2D shallow-water nesting applications. I guess that we never accounted for a User needing nesting in a 2D application. It will need an equation to impose the transport from the donor coarser grid. We will have to figure out what value to load into bry_val.

Re: Possible bug in wetdry.F for nested apps

The motivation would be to do storm surge modelling, in a way that is roughly consistent with other storm surge models such as SLOSH or ADCIRC (both mostly 2d, at least until a couple of years ago...I'm not sure about the newest developments). 3D is great, but having 2D for comparison would be very interesting. Please let me know if you disagree?I guess that we never accounted for a User needing nesting in a 2D application.

In the coming weeks I will try to better understand the algorithm, and the hints you gave are very helpful. I read the Wiki today and think that the DU/V_avg2 is the average of the barotropic flux over the entire baroclinic timestep, weighted with the secondary weights (centered roughly in between the baroclinic step). In the refinement nesting, these are imposed at the finer grid physical boundaries to have agreeing mass fluxes between donor and receiver. I think that agrees with how I understand the Nesting-wiki when it says:I guess that we never accounted for a User needing nesting in a 2D application. It will need an equation to impose the transport from the donor coarser grid. We will have to figure out what value to load into bry_val.

So maybe in a 2d setup, one could store the volume flux of the donor after each barotropic corrector step, and impose that during the 3 (or 5 etc...) time steps made in the receiver grid?In grid refinement applications, the information is exchanged at the beginning of the full time-step (top of main2d or main3d). Contrarily, in composite grid applications, the information is exchanged between each sub-time step call in main2d or main3d. That is, the composite donor and receiver grids need to sub-time step the 2D momentum equations before any of them start solving and coupling the 3D momentum equations. Therefore, the governing equations are solved and nested in a synchronous fashion.

Re: Possible bug in wetdry.F for nested apps

Hmm, I guess one should store two time levels of the donor volume flux along the boundary, and then do a temporal interpolation. Applying only a single time level may be inconsistent with the temporal interpolations of zeta and u/vbar in put_refine() ? Does this make any sense physically?So maybe in a 2d setup, one could store the volume flux of the donor after each barotropic corrector step, and impose that during the 3 (or 5 etc...) time steps made in the receiver grid?

So one could store two consecutive DUon/DVon at the end of step2d.F for the coarse grid, store them in get_persisted2() in the REFINED structure, and temporally interpolate in u/vdbc_im.F. Then, in put_refine(), one would have to prevent overwriting u/vbar at the physical fine-grid boundary (similar to what is done for the 3D case).

Re: Possible bug in wetdry.F for nested apps

I wrote a blog post [1] about my experience using ROMS in 2d setups with grid nesting and wetting/drying. Very early stage, but if anybody is interested in this, I appreciate comments/suggestions, in particular if you are working on new code that is being pushed to the repository in the near future.

Currently I'm doing the following:

1) Updated array bounds in the file wetdry.F to include any contact regions.

2) New masks for ubar and vbar. I simply store the coefficient cff7 used to mask u/vbar in step2d_LF_AM3.h. The new masks are submitted as arguments to the function fine2coarse2d(), instead of the previous u/vmask_full. The new masks also serve for the computation of the interpolation weights used in populating the child grid with parent grid information.

3) Don't apply interpolation onto the coarse grid where the coarse grid is dry.

4) The child grids are forced at the boundary with the latest instantaneous barotropic volume flux of the parent grid (first option of my previous post.

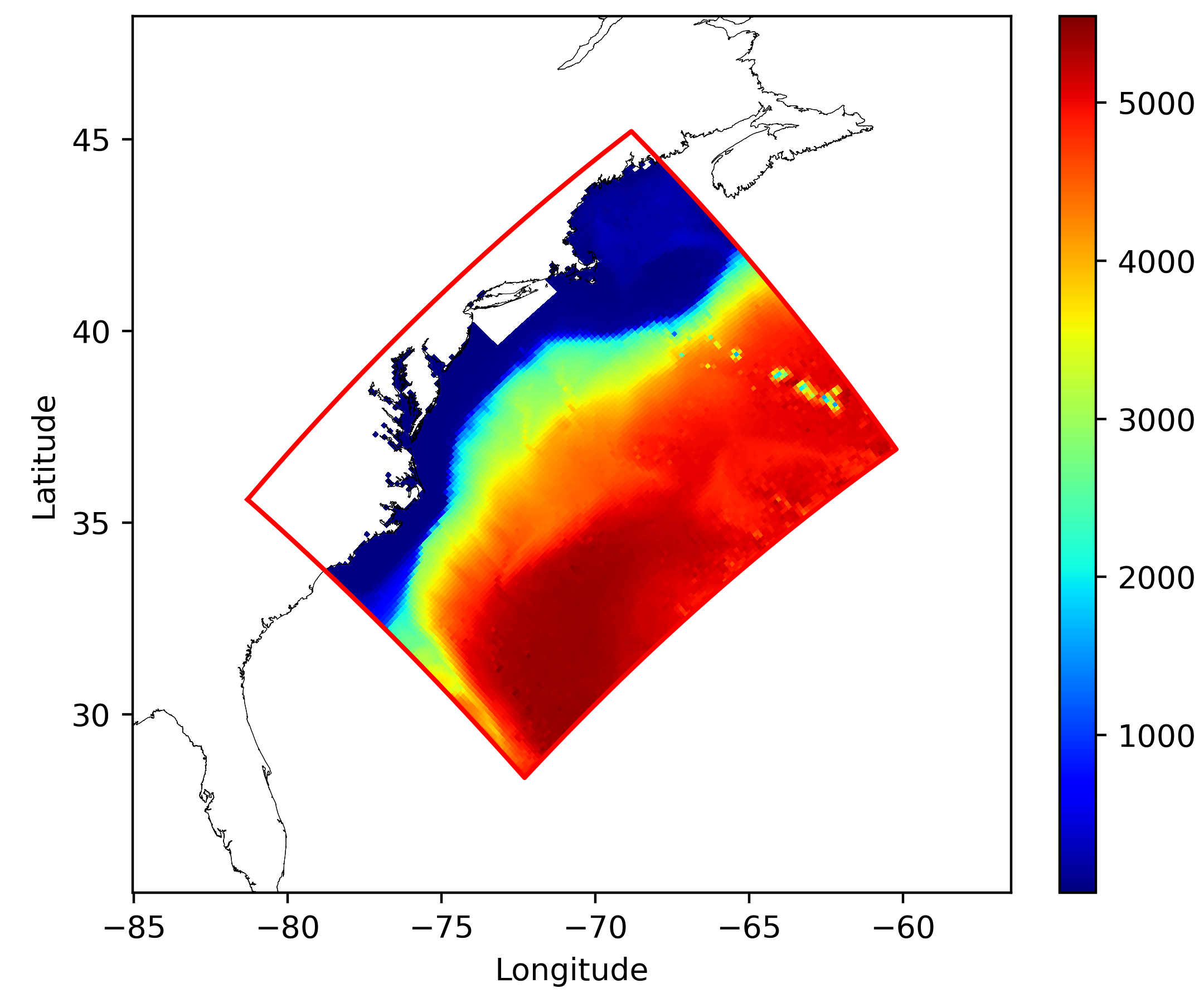

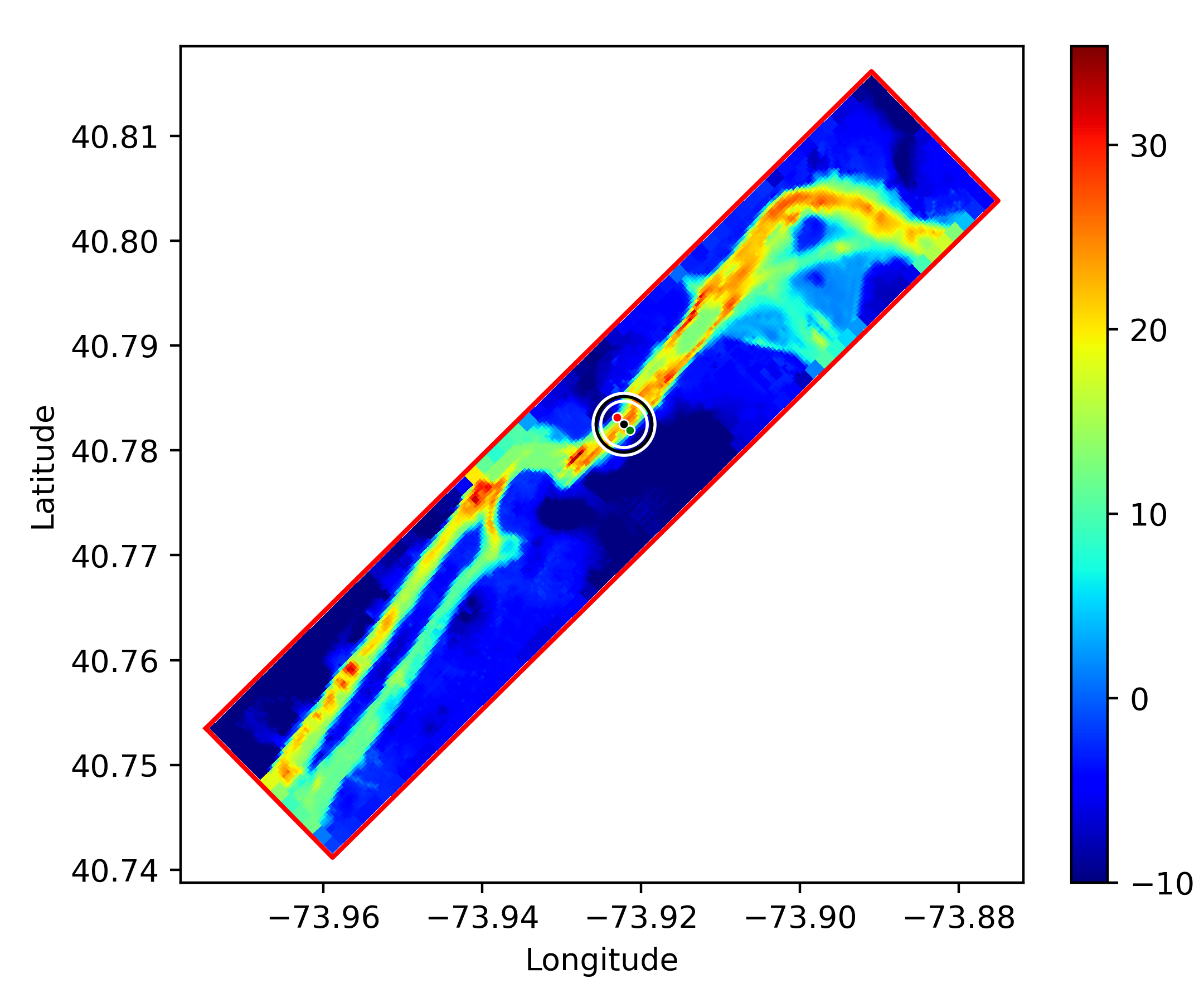

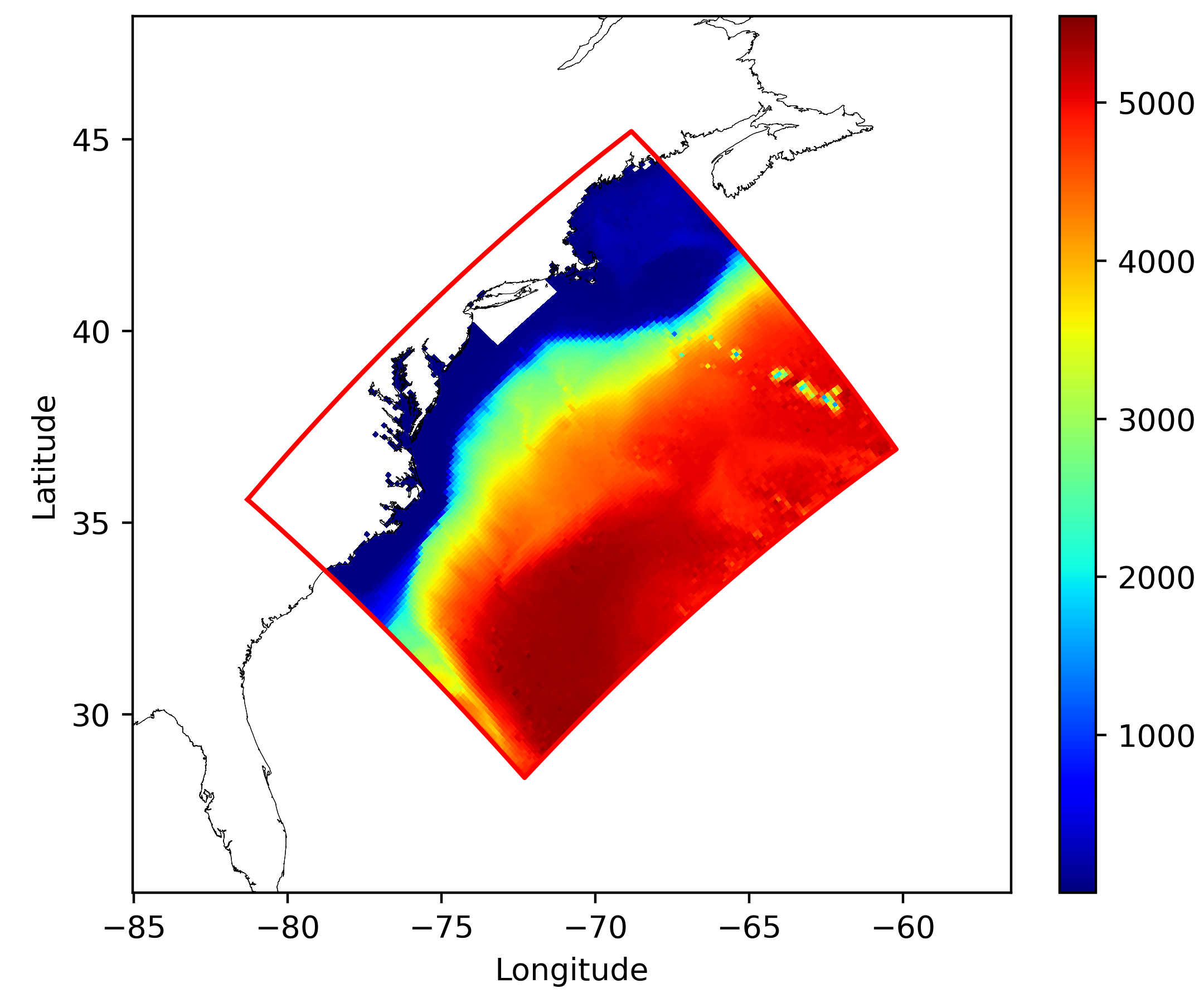

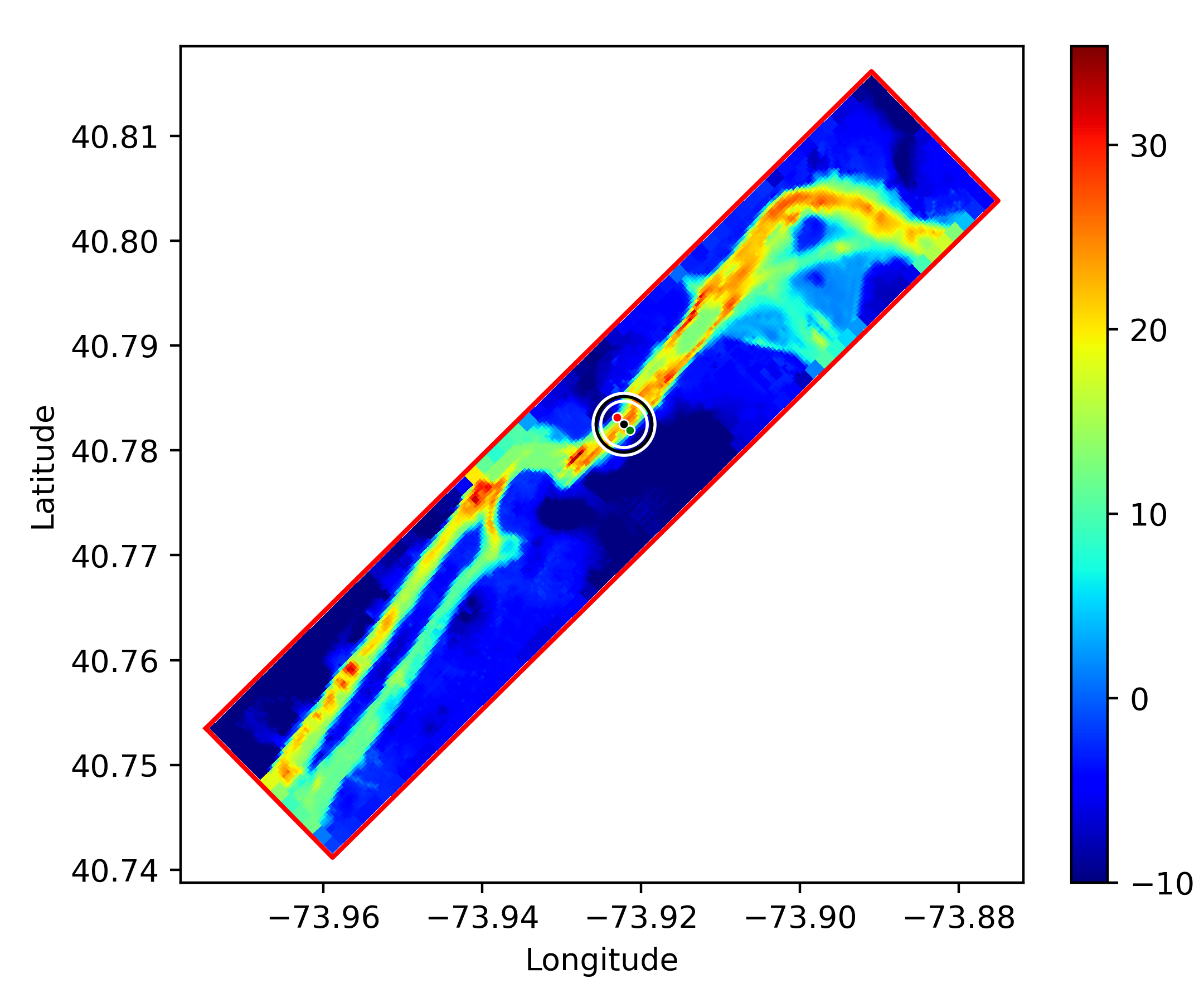

As a test I'm trying to simulate tidal flow in the East River, NY, by scaling down from a large grid (12 km)

to a small grid of the East River (32 m, cropped Hudson/Harlem rivers for preliminary testing)

It was important for me to use realistic (although severely cropped) bathymetry, so I get to know the wetting/drying algorithm better.

More details on researchgate:

[1] https://www.researchgate.net/publicatio ... tingdrying

Currently I'm doing the following:

1) Updated array bounds in the file wetdry.F to include any contact regions.

2) New masks for ubar and vbar. I simply store the coefficient cff7 used to mask u/vbar in step2d_LF_AM3.h. The new masks are submitted as arguments to the function fine2coarse2d(), instead of the previous u/vmask_full. The new masks also serve for the computation of the interpolation weights used in populating the child grid with parent grid information.

3) Don't apply interpolation onto the coarse grid where the coarse grid is dry.

4) The child grids are forced at the boundary with the latest instantaneous barotropic volume flux of the parent grid (first option of my previous post.

As a test I'm trying to simulate tidal flow in the East River, NY, by scaling down from a large grid (12 km)

to a small grid of the East River (32 m, cropped Hudson/Harlem rivers for preliminary testing)

It was important for me to use realistic (although severely cropped) bathymetry, so I get to know the wetting/drying algorithm better.

More details on researchgate:

[1] https://www.researchgate.net/publicatio ... tingdrying

Last edited by stef on Thu Oct 24, 2024 2:11 pm, edited 1 time in total.

Re: Possible bug in wetdry.F for nested apps

this looks cool! i looked at your paper:

https://sriha.net/articles/storm-surge- ... ing-drying

and you are really digging into the fine details. dont think many people have cascaded down from km to m scales. nice to see.

https://sriha.net/articles/storm-surge- ... ing-drying

and you are really digging into the fine details. dont think many people have cascaded down from km to m scales. nice to see.

- arango

- Site Admin

- Posts: 1383

- Joined: Wed Feb 26, 2003 4:41 pm

- Location: DMCS, Rutgers University

- Contact:

Re: Possible bug in wetdry.F for nested apps

Great work, Stefan. I concur with john about your telescoping approach into meter scales. It will take me some time to digest the changes that you make to the code. If you provide us the modifications that you have to do for the shallow-water nested applications to work, we can incorporate such changes to the code. The wetting and drying are problematic since we don't have a way to implement it from first principles in the governing equations. Therefore, we need to follow an engineering approach to implement it numerically in ROMS. I know that John and colleagues are working on a more robust approach to wetting and drying.

Re: Possible bug in wetdry.F for nested apps

Hi,

Thanks for your email, I'll respond tomorrow, meanwhile I attached the patch. Please note:

*) Some (much?) of this may be incorrect. I'm a beginner. My approach was "if I get a reasonable result, it's good enough for now".

*) I have not really understood MPI yet, in particular, in the end of step2d I'm doing things I'm not sure are right. I just copied some patterns I saw in your code.

*) I did not fix the possible "bug" reported here:

viewtopic.php?f=14&t=5438&p=21072#p21072

I did fix it in serial mode, but then realized that what I did, did not work in parallel mode. If I remember correctly, the coarse-grid boundary data was not available from all tiles needed in the fine grid. There was always a little segment that wasn't properly processed. Instead I modified the fine grid to be identical to the coarse-grid topography at the boundary (visible in the figures above), which is perhaps a prudent thing to do anyways.

*) The patch is with respect to git commit 'c23f66eb118e', or "src 826".

826".

Thanks for your email, I'll respond tomorrow, meanwhile I attached the patch. Please note:

*) Some (much?) of this may be incorrect. I'm a beginner. My approach was "if I get a reasonable result, it's good enough for now".

*) I have not really understood MPI yet, in particular, in the end of step2d I'm doing things I'm not sure are right. I just copied some patterns I saw in your code.

*) I did not fix the possible "bug" reported here:

viewtopic.php?f=14&t=5438&p=21072#p21072

I did fix it in serial mode, but then realized that what I did, did not work in parallel mode. If I remember correctly, the coarse-grid boundary data was not available from all tiles needed in the fine grid. There was always a little segment that wasn't properly processed. Instead I modified the fine grid to be identical to the coarse-grid topography at the boundary (visible in the figures above), which is perhaps a prudent thing to do anyways.

*) The patch is with respect to git commit 'c23f66eb118e', or "src

Re: Possible bug in wetdry.F for nested apps

I should say that the "mod_stef.F" is very messy. Sorry.  The only variables needed are the ones used in step2d.

The only variables needed are the ones used in step2d.

Re: Possible bug in wetdry.F for nested apps

Update: note that there is a problem with the patch in that the wet/dry flux limiter (i.e. suppress outflow from a dry cell) does not work at the boundary. An already dry cell adjacent to the boundary may still export water out of the domain, depending on the boundary condition. It could be fixed simply by applying the fine grid wetmask, but then the volume fuxes between the coarse and fine grids don't agree anymore